Microsoft Data Fabric

Microsoft has introduced a new platform called Microsoft Fabric, referred to here as Microsoft Data Fabric. At the time of writing it is in public preview. It is mainly used for data analytics.

It is an all-in-one platform for gathering data from multiple sources, such as SQL, Data Lake, Dataverse, Excel, and CSV. After the data is fetched from these sources, it all lands in a single lakehouse in table format.
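As a rough illustration of what bringing every source into one table format means, the sketch below maps a CSV extract and a JSON extract onto one shared set of columns. It uses only the Python standard library. The column names, the sample values, and the `to_unified_rows` helper are hypothetical, and in Fabric itself this work is done by dataflows, pipelines, or notebooks rather than hand-written code like this.

```python
import csv
import io
import json

# Hypothetical sample extracts standing in for two different sources.
CSV_SOURCE = "id,name,amount\n1,Alice,10.5\n2,Bob,20\n"
JSON_SOURCE = '[{"customer_id": 3, "customer_name": "Carol", "total": 7.25}]'

# Shared target schema for the unified table (assumed for illustration).
COLUMNS = ("id", "name", "amount", "source")


def to_unified_rows(csv_text, json_text):
    """Map both sources onto the same columns and return a list of dicts."""
    rows = []
    for rec in csv.DictReader(io.StringIO(csv_text)):
        rows.append({"id": int(rec["id"]), "name": rec["name"],
                     "amount": float(rec["amount"]), "source": "csv"})
    for rec in json.loads(json_text):
        rows.append({"id": int(rec["customer_id"]), "name": rec["customer_name"],
                     "amount": float(rec["total"]), "source": "json"})
    return rows


unified = to_unified_rows(CSV_SOURCE, JSON_SOURCE)
for row in unified:
    print([row[c] for c in COLUMNS])
```

Each source keeps its own field names on the way in, but every row that comes out has the same four columns, which is the property a lakehouse table relies on.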

It offers a lot of flexibility as a data analytics platform. We can implement a lakehouse, a data warehouse, or a combination of both to transform and simplify the data for presentation in Power BI.

1. Data Engineering: The Data Engineering experience enables users to design, build, and maintain the infrastructure and systems that let their organizations collect, store, process, and analyze large volumes of data.

- The Data Science experiences empower users to complete end-to-end data science workflows for data enrichment and business insights.

- At a high level, the process involves these steps:

  - Problem formulation and ideation

  - Data discovery and pre-processing

  - Experimentation and modeling

  - Enrich and operationalize

  - Gain insights

- Fabric is a unified product that addresses every aspect of an organization's data estate by offering a complete, SaaS-ified data, analytics, and AI platform.

4. Real Time Analytics

- Real-Time Analytics is a fully managed big data analytics platform optimized for streaming and time-series data. It provides a dedicated query language and engine that deliver high performance when searching structured, semi-structured, and unstructured data.
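To give a feel for the kind of question a time-series engine answers, here is a plain-Python sketch that counts events in one-minute windows. The event data and the `count_per_minute` helper are hypothetical. In Fabric such a query would be written in the engine's own query language and run against streaming data, so treat this only as an illustration of the idea.

```python
from collections import Counter
from datetime import datetime

# Hypothetical stream of (timestamp, device) events.
events = [
    ("2023-06-01T10:00:05", "sensor-a"),
    ("2023-06-01T10:00:40", "sensor-b"),
    ("2023-06-01T10:01:10", "sensor-a"),
    ("2023-06-01T10:03:59", "sensor-a"),
]


def count_per_minute(stream):
    """Count events in one-minute tumbling windows, keyed by window start."""
    counts = Counter()
    for ts, _device in stream:
        # Truncate each timestamp to the start of its minute.
        window_start = datetime.fromisoformat(ts).replace(second=0, microsecond=0)
        counts[window_start.isoformat()] += 1
    return dict(sorted(counts.items()))


per_minute = count_per_minute(events)
print(per_minute)
```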

- Sign in to your Power BI account, or if you don't have one yet, sign up for a free trial.

- Build and implement an end-to-end lakehouse for your organization:

- Create a Fabric workspace.

- Create a lakehouse. This step includes an optional section on implementing the medallion architecture, that is, the bronze, silver, and gold layers.

- Ingest data, transform it, and load it into the lakehouse. Load data into the bronze, silver, and gold zones as Delta Lake tables. You can also explore OneLake, the OneCopy of your data, across lake mode and warehouse mode.

- Connect to your lakehouse using the TDS/SQL endpoint and create a Power BI report using DirectLake to analyze sales data across different dimensions.

- Optionally, you can orchestrate and schedule data ingestion and transformation flow with a pipeline.

- Clean up resources by deleting the workspace and other items.
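The bronze, silver, and gold layers mentioned in the steps above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not how Fabric implements it: the sales rows, the cleaning rules, and the `to_silver` and `to_gold` helpers are all hypothetical, and in a real lakehouse each layer would typically be a Delta Lake table written from a notebook or pipeline.

```python
from collections import defaultdict

# Bronze: raw rows exactly as ingested (hypothetical sales data, all strings).
bronze = [
    {"order_id": "1", "region": "East", "amount": "100.0"},
    {"order_id": "2", "region": "west", "amount": "50.5"},
    {"order_id": "2", "region": "west", "amount": "50.5"},   # duplicate
    {"order_id": "3", "region": "East", "amount": "n/a"},    # bad amount
]


def to_silver(rows):
    """Clean bronze rows: cast types, normalize text, drop bad rows and duplicates."""
    seen, silver = set(), []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        order_id = int(row["order_id"])
        if order_id in seen:
            continue  # keep only the first copy of each order
        seen.add(order_id)
        silver.append({"order_id": order_id,
                       "region": row["region"].strip().title(),
                       "amount": amount})
    return silver


def to_gold(rows):
    """Aggregate silver rows into a report-ready total per region."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)
```

The gold output is the shape a Power BI report would read: one row per dimension value with the measure already aggregated.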

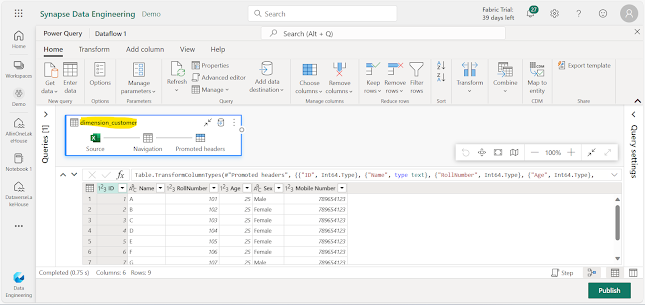

1. Select the Data Engineering option from the Data Fabric portal, Power BI (microsoft.com).


15. Once we publish the dataflow, the components below are generated automatically inside the new lakehouse we created.

16. Select the properties of dataflow1 to rename the flow.

19. In the same way, we can import data from SQL, Dataverse, Data Lake, OData, and other sources into this lakehouse.
